Multi-modal localization and enhancement of multiple sound sources from a Micro Aerial Vehicle

Published in Proc. of ACM Multimedia, Mountain View, USA, October 23-27, 2017

Recommended citation: R. Sanchez-Matilla, L. Wang and A. Cavallaro. "Multi-modal localization and enhancement of multiple sound sources from a Micro Aerial Vehicle." Proc. of ACM Multimedia, 2017.

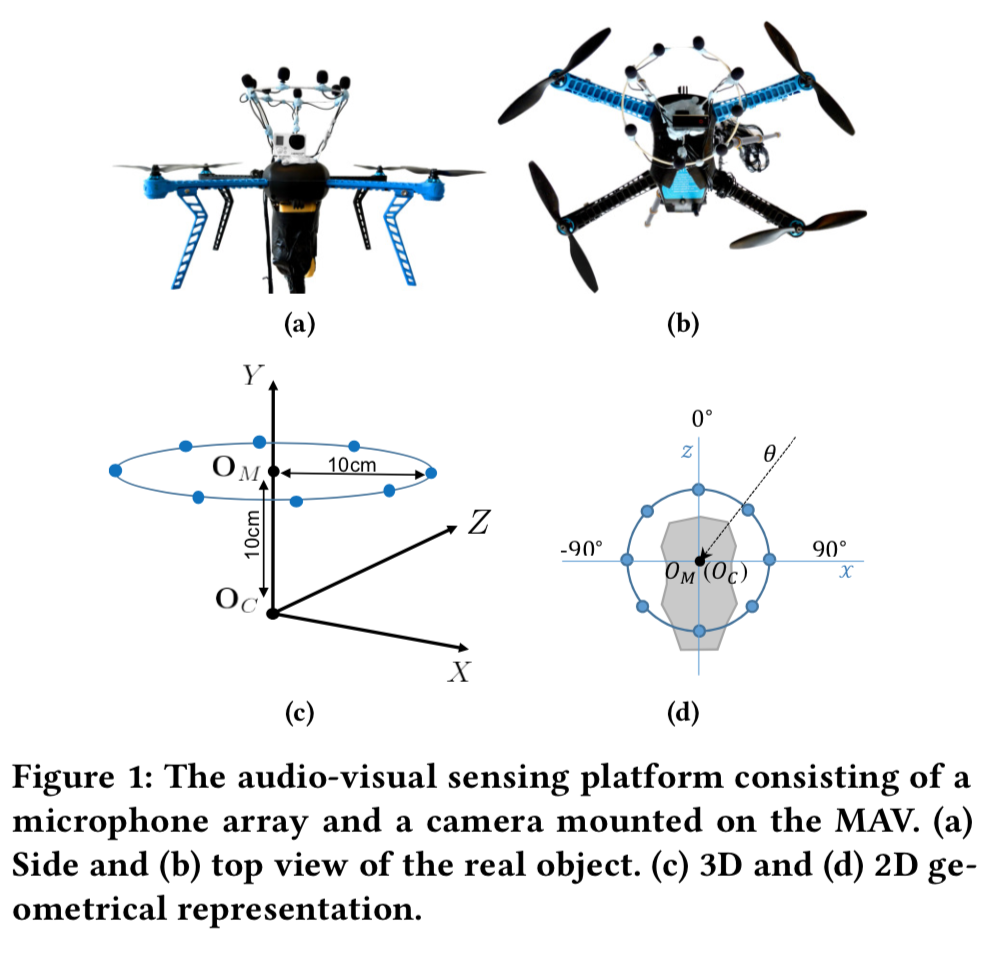

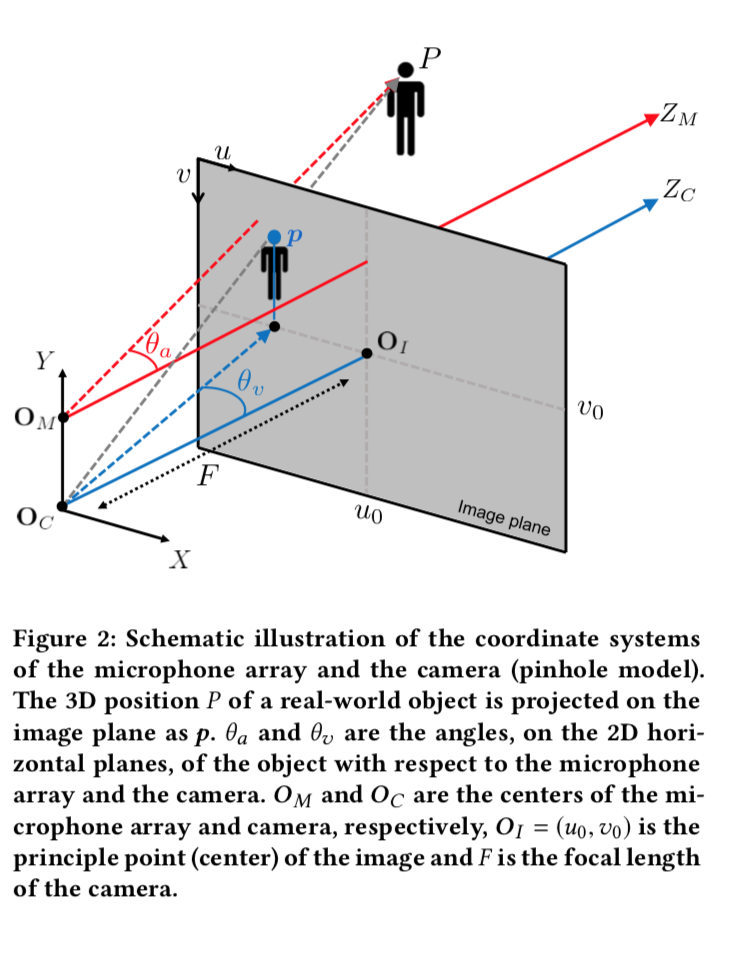

Abstract

The ego-noise generated by the motors and propellers of a micro aerial vehicle (MAV) masks environmental sounds and considerably degrades the quality of on-board sound recordings. Sound enhancement approaches generally require knowledge of the directions of arrival of the target sound sources, which are difficult to estimate because of the low signal-to-noise ratio (SNR) caused by the ego-noise and the interference between multiple sources. To address this problem, we propose a multi-modal analysis approach that jointly exploits audio and video data to enhance the sounds of multiple targets captured from an MAV equipped with a microphone array and a video camera. We first perform audio-visual calibration via camera resectioning, audio-visual temporal alignment and geometrical alignment, so that features from the independently generated audio and video streams can be used jointly. The spatial information from the video assists sound enhancement: a particle filter tracks multiple potential sound sources. We then infer the directions of arrival of the target sources from the video tracking results and extract the sound from the desired direction with a time-frequency spatial filter, which suppresses the ego-noise by exploiting its time-frequency sparsity. Experimental results with real outdoor data verify the robustness of the proposed multi-modal approach for multiple speakers in extremely low-SNR scenarios.
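To illustrate the step of inferring a direction of arrival from a video tracking result, the following is a minimal sketch and not the authors' implementation. It assumes an ideal pinhole camera without lens distortion, and it assumes the geometrical alignment has already been applied so that the camera's optical axis and the microphone array share the same origin and reference direction. The function name `pixel_to_azimuth_deg` and the intrinsic values `fx` and `cx` are hypothetical.

```python
import math


def pixel_to_azimuth_deg(u, cx, fx):
    """Horizontal angle, in degrees, of image column u relative to the optical axis.

    u  : horizontal pixel coordinate of the tracked target (e.g. bounding-box centre)
    cx : horizontal coordinate of the principal point, in pixels (assumed known
         from camera resectioning)
    fx : horizontal focal length, in pixels (assumed known from camera resectioning)
    """
    # Pinhole model: a point at column u lies on a ray whose horizontal
    # offset from the optical axis is (u - cx) at a depth of fx pixels.
    return math.degrees(math.atan2(u - cx, fx))


# Hypothetical intrinsics for a 1920-pixel-wide image.
fx = 960.0
cx = 960.0

# A target at the image centre lies on the optical axis.
centre_angle = pixel_to_azimuth_deg(960.0, cx, fx)

# A target at the right image border lies at atan(960 / 960) from the axis.
border_angle = pixel_to_azimuth_deg(1920.0, cx, fx)

print(centre_angle, border_angle)
```

Under these assumptions, the angle obtained for each tracked target could be passed to a spatial filter as its steering direction. A real system would also need the elevation angle and the calibrated offset between the camera and the microphone array.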

Sample images

Video