Confidence Intervals for Tracking Perforamance Scores

Published in Proc. of IEEE Int. Conf. on Image Processing (ICIP), Athens, Greece, October 7-10, 2018

Recommended citation: R. Sanchez-Matilla and A. Cavallaro. "Confidence Intervals for Tracking Perforamance Scores." Proc. of IEEE Int. Conf. on Image Processing (ICIP).

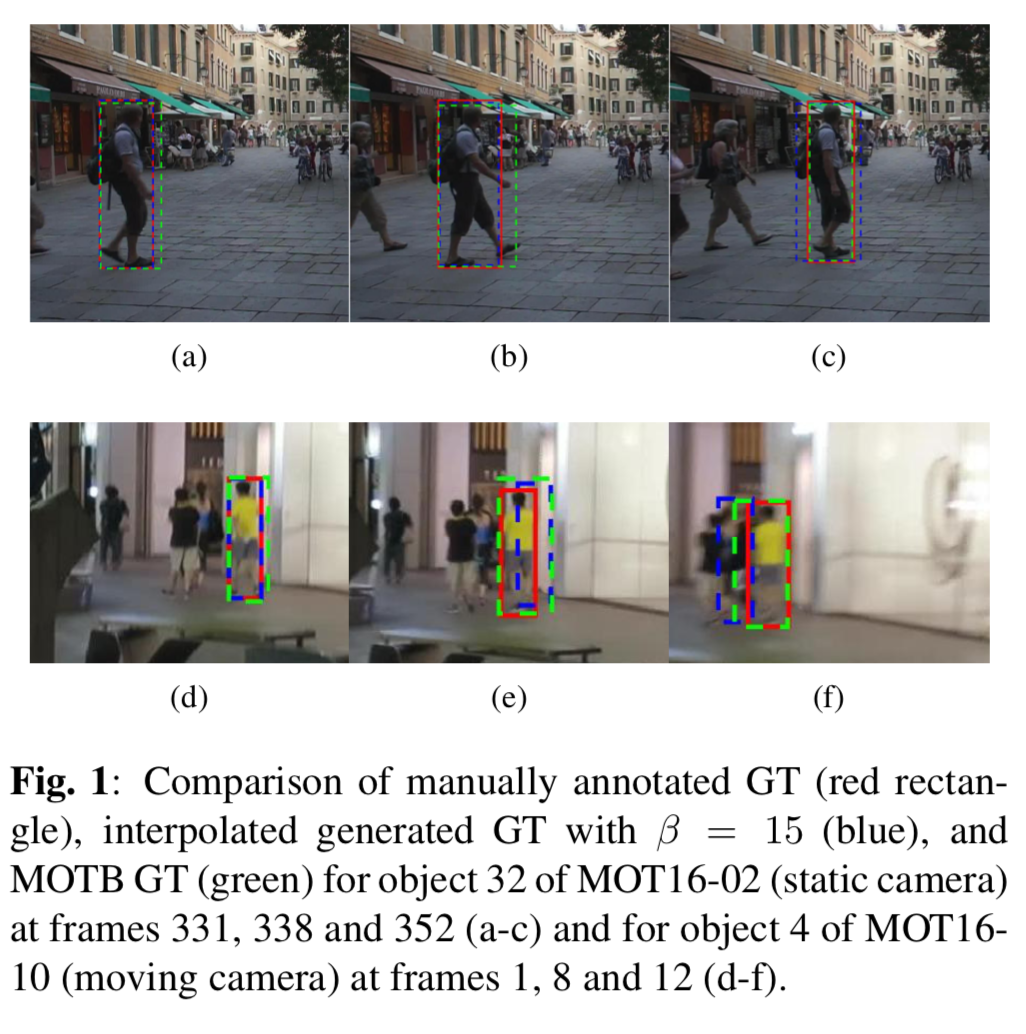

Abstract The objective evaluation of trackers quantifies the discrepancy between tracking results and a manually annotated ground truth. As generating ground truth for a video dataset is tedious and time-consuming, often only keyframes are manually annotated. The annotation between these keyframes is then obtained semi-automatically, for example with linear interpolation. This approximation has two main undesirable consequences: first, interpolated annotations may drift from the actual object, especially with moving cameras; second, trackers that use linear prediction or regularize trajectories with linear interpolation unfairly gain a higher tracking evaluation score. This problem may become even more important when semi-automatically annotated datasets are used to train machine learning modules. To account for these annotation inaccuracies for a given dataset, we identify objects whose annotations are interpolated and propose a simple method that analyzes existing annotations and produces a confidence interval to complement tracking scores. These confidence intervals quantify the uncertainty in the annotation and allow us to appropriately interpret the ranking of trackers with respect to the chosen tracking performance score.

Sample image

Links Paper Presentation