A predictor of moving objects for first-person vision

Published in Proc. of IEEE Int. Conf. on Image Processing (ICIP), Taipei, Taiwan, September 22-25, 2019

Recommended citation: R. Sanchez-Matilla and A. Cavallaro. "A predictor of moving objects for first-person vision." Proc. of IEEE Int. Conf. on Image Processing (ICIP), Taipei, Taiwan, September 2019.

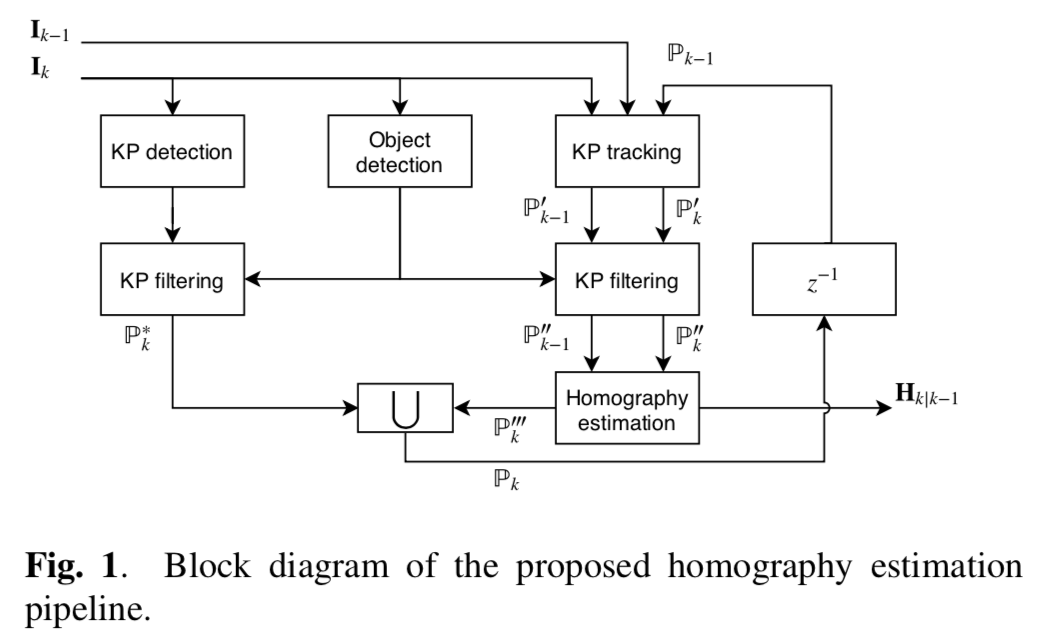

Abstract: Predicting the motion of objects despite the presence of camera motion is important for first-person vision tasks. In this paper, we present an accurate model that forecasts the location of moving objects by disentangling global and object motion, without the need for camera calibration information or planarity assumptions. The proposed predictor uses past observations to model the motion of objects online by selectively tracking a spatially balanced distribution of keypoints and estimating scene transformations between frame pairs. We show that it forecasts up to 60% more accurately than state-of-the-art alternatives while being resilient to noisy observations. Moreover, the proposed predictor is robust to frame-rate reductions and outperforms alternative approaches while processing only 33% of the frames when the camera moves. We also show the benefit of integrating the proposed predictor into a multi-object tracker.
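The idea of separating camera (global) motion from object motion can be illustrated with a small sketch. This is not the authors' implementation: the grid bucketing used to spread keypoints, the least-squares affine model of the scene transformation (a simplification chosen here for brevity; the paper itself avoids planarity assumptions), and the constant-velocity model of the residual object motion are all hypothetical choices, as are the function names `balanced_keypoints`, `fit_affine`, `apply_affine` and `predict_next`.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Affine = Tuple[float, float, float, float, float, float]  # x' = a x + b y + c, y' = d x + e y + f


def balanced_keypoints(points: Sequence[Tuple[float, float, float]],
                       width: float, height: float,
                       grid: int = 4, per_cell: int = 2) -> List[Point]:
    """Hypothetical spatial balancing: keep at most `per_cell` of the
    highest-scoring (x, y, score) keypoints in each cell of a grid x grid layout."""
    cells = {}
    for x, y, score in sorted(points, key=lambda p: -p[2]):
        cx = min(int(x / width * grid), grid - 1)
        cy = min(int(y / height * grid), grid - 1)
        bucket = cells.setdefault((cx, cy), [])
        if len(bucket) < per_cell:
            bucket.append((x, y))
    return [p for bucket in cells.values() for p in bucket]


def _det3(a):
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def _solve3(m, v):
    """Solve the 3x3 system m @ s = v with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate (e.g. collinear) keypoint configuration")
    sol = []
    for col in range(3):
        mc = [row[:] for row in m]
        for i in range(3):
            mc[i][col] = v[i]
        sol.append(_det3(mc) / d)
    return sol


def fit_affine(src: Sequence[Point], dst: Sequence[Point]) -> Affine:
    """Least-squares affine transform mapping keypoints in frame t-1 (src)
    onto the same keypoints tracked in frame t (dst), via normal equations."""
    m = [[0.0] * 3 for _ in range(3)]
    bx = [0.0] * 3
    by = [0.0] * 3
    for (x, y), (u, v) in zip(src, dst):
        r = (x, y, 1.0)
        for i in range(3):
            bx[i] += r[i] * u
            by[i] += r[i] * v
            for j in range(3):
                m[i][j] += r[i] * r[j]
    a, b, c = _solve3(m, bx)
    d, e, f = _solve3(m, by)
    return (a, b, c, d, e, f)


def apply_affine(t: Affine, p: Point) -> Point:
    a, b, c, d, e, f = t
    x, y = p
    return (a * x + b * y + c, d * x + e * y + f)


def predict_next(prev: Point, curr: Point, camera: Affine) -> Point:
    """Forecast the object location at t+1.

    `camera` maps frame t-1 coordinates to frame t coordinates and is assumed
    (hypothetically) to repeat between t and t+1."""
    wx, wy = apply_affine(camera, prev)   # where prev would be if only the camera moved
    vx, vy = curr[0] - wx, curr[1] - wy   # residual, object-only displacement
    nx, ny = apply_affine(camera, curr)   # propagate the camera motion one more step
    return (nx + vx, ny + vy)
```

For example, if the tracked keypoints all shift by 5 pixels to the right (a pure camera pan) while an object moves from x = 100 to x = 112, the sketch attributes 7 pixels to the object itself and forecasts the next position at x ≈ 124. A plain constant-velocity predictor that ignores the camera would instead forecast x = 124 only by coincidence here, and would drift whenever the camera motion changes.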

